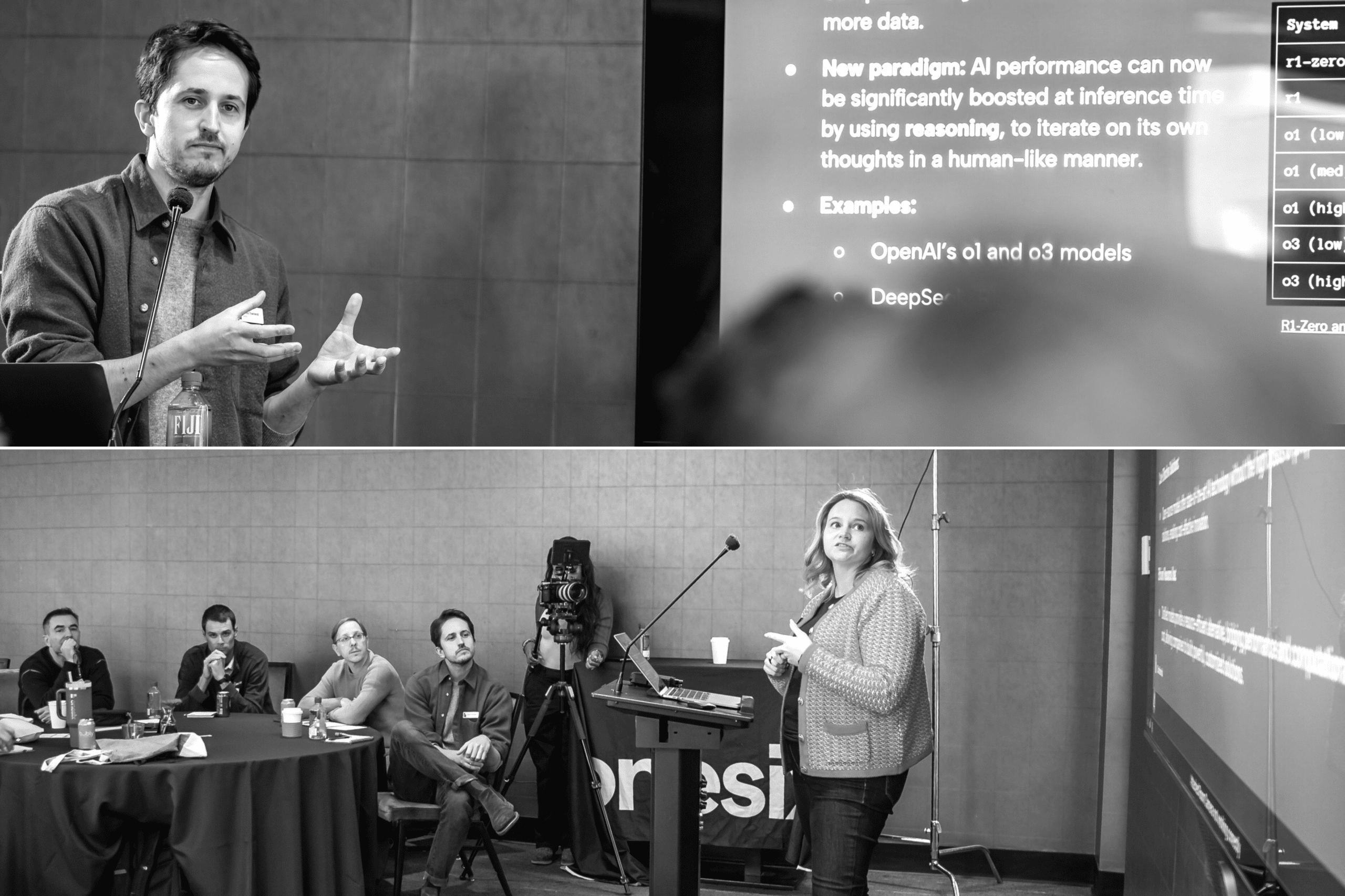

AI’s Next Big Shift: What Business Leaders Need to Know

Written by James Townend & Nina Singer, Lead ML Scientists

Published March 19, 2025

Artificial intelligence continues to transform the tech landscape at breakneck speed. AI is driving innovation in every sector, from how we process queries to the tools we use for automation. Below are five key trends shaping AI’s evolution in 2025, and why they matter.

1. From Training-Time to Inference-Time Compute

Traditionally, AI performance scaled primarily with training-time compute: We spent more resources to train bigger models on more data. Now, inference-time compute—the compute spent when a trained model answers a query—has become a major new control lever.

Why It Matters

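- Improved Reliability & Accuracy: By “thinking” more at inference time (for example, working through intermediate reasoning steps or checking several candidate answers), AI systems can produce more accurate and reliable outputs, especially on multi-step problems. This is analogous to a human spending extra time carefully reasoning about a difficult question.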

- Efficiency Breakthroughs: Techniques like distillation, quantization, mixture of experts, and speculative decoding make inference economically viable at scale. The result: smaller, more nimble models can rival larger counterparts on many tasks, spending extra compute only when a query needs it.

- Dynamic Compute & Costs: Instead of paying a one-time bill for a model’s training, organizations can now allocate compute resources adaptively at inference, turning accuracy into a dynamic dial they can tune per task.
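One common way to spend extra inference-time compute is self-consistency: sample several answers to the same question and keep the majority vote. The sketch below is a simulation, not a real model: the hypothetical `noisy_solver` stands in for one sampled model call and is assumed to be right 60% of the time. Raising `n_samples` is the accuracy dial described above, with cost growing linearly in the number of samples.

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, correct: int, p_correct: float = 0.6) -> int:
    """Hypothetical stand-in for one sampled model answer (not a real model API).

    Returns the correct answer with probability p_correct; otherwise returns
    one of five plausible wrong answers.
    """
    if rng.random() < p_correct:
        return correct
    return correct + rng.randint(1, 5)


def self_consistency(rng: random.Random, correct: int, n_samples: int) -> int:
    """Sample n_samples answers and return the most common one (majority vote)."""
    votes = Counter(noisy_solver(rng, correct) for _ in range(n_samples))
    answer, _count = votes.most_common(1)[0]
    return answer


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of trials in which the majority vote matches the true answer."""
    rng = random.Random(seed)
    hits = sum(self_consistency(rng, 42, n_samples) == 42 for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for n in (1, 5, 15):
        # More samples per query = more inference compute = higher accuracy.
        print(f"{n:>2} samples per query -> accuracy {accuracy(n):.3f}")
```

Real gains depend on the task and on how independent the model’s errors are; this simulation assumes wrong answers are scattered across several values, which is the favorable case for voting.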

The Bigger Picture

As models shift more reasoning to real-time computation, the hardware and infrastructure for user-facing AI will need to scale to support these heavier inference workloads. This also opens opportunities for edge inference, which involves moving some computation onto devices like phones, robots, and IoT systems.
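Quantization, one of the efficiency techniques listed above, is a big part of what makes edge inference feasible: storing weights as 8-bit integers instead of 32-bit floats cuts their memory footprint roughly fourfold. Here is a minimal sketch of symmetric per-tensor int8 quantization in pure Python; production toolchains typically use finer-grained (per-channel or per-group) scales and operate on real tensors, so treat this only as an illustration of the idea.

```python
import random
from array import array


def quantize_int8(weights: array) -> tuple[array, float]:
    """Symmetric per-tensor int8 quantization.

    Maps the range [-max|w|, +max|w|] onto the integers [-127, 127] using a
    single float scale, so each weight is stored in 1 byte instead of 4.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize_int8(q: array, scale: float) -> list[float]:
    """Recover approximate float weights from the int8 values and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    rng = random.Random(0)
    # float32 storage ("f"), like typical model weights
    weights = array("f", (rng.gauss(0.0, 1.0) for _ in range(10_000)))
    q, scale = quantize_int8(weights)
    approx = dequantize_int8(q, scale)
    max_err = max(abs(a - b) for a, b in zip(approx, weights))
    print(f"bytes: {len(weights) * weights.itemsize} -> {len(q) * q.itemsize}")
    print(f"max abs error: {max_err:.5f} (bounded by scale/2 = {scale / 2:.5f})")
```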

2. Enterprise Search Is Good Now

Enterprise search was an afterthought for years, plagued by siloed data sources, poorly structured documents, and a lack of meaningful relevance signals. Modern vector embeddings have changed that, making Retrieval-Augmented Generation (RAG) the new standard.

Why It Matters

- Universal Indexing: Embedding models can map text, images, code, and audio into a shared vector space. That common representation means we can embed all company knowledge, from PDFs to Slack messages, and power vector searches that find meaning, not just keywords.

- RAG = Answers, Not Documents: Retrieval-Augmented Generation doesn’t merely list documents for you; it synthesizes relevant passages and provides direct answers. This marks a major shift in how we interact with knowledge, moving from “look it up” to “converse with an expert.”

- Legacy Industries’ Data Goldmine: Companies in law, finance, and healthcare hold vast stores of valuable documents. RAG helps them unlock insights buried in previously unsearchable data, boosting productivity and compliance.
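The retrieve-then-generate pattern behind RAG can be sketched in a few lines. This is a toy under stated assumptions: the hypothetical `embed` function is a bag-of-words stand-in, so unlike a learned embedding model it only matches shared words, not meaning. In practice you would swap in a real embedding model and a vector database, and send the final prompt to an LLM (omitted here).

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector.
    A learned model would also place synonyms and paraphrases near each other."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(count * b[token] for token, count in a.items() if token in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Ground the model's answer in retrieved passages instead of returning a document list."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Expense reports are due on the 5th of each month.",
        "The VPN requires multi-factor authentication.",
        "Parental leave policy: 16 weeks paid.",
    ]
    print(build_rag_prompt("When are expense reports due?", docs, k=1))
```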

The Bigger Picture

With vector search and RAG, enterprise search resembles a true domain-expert assistant. Organizations finally have the tools to leverage vast document stores efficiently. It’s akin to what Google did for the early public internet—now applied to private, internal data.

3. AI Agents

The next revolution in AI-driven automation is the rise of AI agents: task-oriented, often autonomous systems that can interact with software and data.

Why It Matters

- Dynamic Workflows: Thanks to large language models (LLMs) that adapt to changing inputs, agents can handle multi-step processes—like scheduling meetings, triaging support tickets, or retrieving and summarizing documents.

- Tool Calling: Agents don’t just parse text; they can make API calls, write and execute code, or even operate user interfaces much as a human would, in the spirit of Robotic Process Automation (RPA).

- Collaboration & Orchestration: In some setups, multiple agents with different specialties team up to solve complex problems. This can be powerful but also tricky to manage and debug.
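At its core, a tool-calling agent is a loop: the model proposes an action, the program executes the matching tool, and the result is appended to the conversation until the model returns a final answer. In the minimal sketch below, `scripted_model` is a hypothetical placeholder that replays fixed decisions; a real agent would send `history` to an LLM and parse its tool-call output. The tools `search_tickets` and `summarize` are likewise illustrative.

```python
import json
from typing import Any, Callable


# Hypothetical tools; a real agent would wrap ticketing APIs, code runners, etc.
def search_tickets(query: str) -> str:
    return json.dumps([{"id": 101, "subject": "VPN down"}])


def summarize(text: str) -> str:
    return f"Summary: {text[:80]}"


TOOLS: dict[str, Callable[..., str]] = {"search_tickets": search_tickets, "summarize": summarize}


def scripted_model(history: list[dict[str, Any]]) -> dict[str, Any]:
    """Placeholder for an LLM call: picks the next action from the history so far."""
    tool_results = [m["content"] for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"tool": "search_tickets", "args": {"query": "vpn"}}
    if len(tool_results) == 1:
        return {"tool": "summarize", "args": {"text": tool_results[0]}}
    return {"final": tool_results[-1]}


def run_agent(task: str, model=scripted_model, max_steps: int = 5) -> str:
    """Loop: ask the model for an action, run the tool, feed the result back."""
    history: list[dict[str, Any]] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if "final" in action:
            return action["final"]
        result = TOOLS[action["tool"]](**action["args"])
        history.append({"role": "tool", "name": action["tool"], "content": result})
    raise RuntimeError("agent exceeded its step budget without finishing")


if __name__ == "__main__":
    print(run_agent("Triage today's VPN tickets"))
```

The `max_steps` budget is a simple guard against runaway loops, which otherwise translate directly into repeated model calls.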

Important Considerations

Agents remain unpredictable at times, owing to LLMs’ black-box nature. For critical systems:

- Keep humans in the loop.

- Impose guardrails and constraints.

- Maintain observability and real-time monitoring.
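The three safeguards above can be combined in a small wrapper around every tool call: an allowlist (guardrails), a human approval step for sensitive actions (human in the loop), and structured logs (observability). The sketch below assumes hypothetical tool names such as `issue_refund`; in practice the `approve` callback might be a ticketing workflow, a chat prompt, or a console input.

```python
import logging
from typing import Any, Callable

log = logging.getLogger("agent.guardrails")

# Hypothetical policy: which tools the agent may call, and which need sign-off.
ALLOWED_TOOLS = {"search_tickets", "summarize", "issue_refund"}
NEEDS_APPROVAL = {"issue_refund"}


class GuardrailError(Exception):
    """Raised when a tool call is blocked by policy or by a human reviewer."""


def guarded_call(
    tool_name: str,
    args: dict[str, Any],
    tools: dict[str, Callable[..., Any]],
    approve: Callable[[str, dict[str, Any]], bool],
) -> Any:
    """Execute a tool only if policy allows it, logging every decision."""
    if tool_name not in ALLOWED_TOOLS or tool_name not in tools:
        log.warning("blocked non-allowlisted tool %s", tool_name)
        raise GuardrailError(f"tool {tool_name!r} is not allowed")
    if tool_name in NEEDS_APPROVAL and not approve(tool_name, args):
        log.info("reviewer rejected %s with args %s", tool_name, args)
        raise GuardrailError(f"reviewer rejected {tool_name!r}")
    log.info("calling %s with args %s", tool_name, args)
    result = tools[tool_name](**args)
    log.info("%s returned %r", tool_name, result)
    return result


def demo_reviewer(tool_name: str, args: dict[str, Any]) -> bool:
    """Stand-in reviewer that approves small refunds only; a real one would ask a person."""
    return args.get("amount", 0) <= 50


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    tools = {"issue_refund": lambda amount: f"refunded ${amount}"}
    print(guarded_call("issue_refund", {"amount": 20}, tools, demo_reviewer))
    try:
        guarded_call("issue_refund", {"amount": 500}, tools, demo_reviewer)
    except GuardrailError as err:
        print("blocked:", err)
```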

The Bigger Picture

We’ll see agents increasingly embedded in customer support, “low-code” software platforms, and legacy system integrations. However, organizations must weigh the potential for cost overruns (since agents call models often) against the productivity gains they deliver.
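Agent costs add up because every step is another model call, and each call typically re-sends the growing conversation history. Here is a back-of-envelope estimator, assuming each step’s output is appended to the next step’s input (tool outputs are ignored for simplicity) and using purely hypothetical per-million-token prices.

```python
def estimate_agent_run_cost(
    steps: int,
    base_input_tokens: int,
    output_tokens_per_step: int,
    usd_per_m_input: float,
    usd_per_m_output: float,
) -> float:
    """Estimated USD cost of one agent run.

    Step i (0-based) re-sends the base prompt plus the outputs of all i earlier
    steps, so per-step input grows linearly and total input grows quadratically.
    """
    total_input = sum(base_input_tokens + i * output_tokens_per_step for i in range(steps))
    total_output = steps * output_tokens_per_step
    return (total_input * usd_per_m_input + total_output * usd_per_m_output) / 1_000_000


if __name__ == "__main__":
    # Hypothetical prices: $3 per million input tokens, $15 per million output tokens.
    single = estimate_agent_run_cost(1, 2_000, 500, 3.0, 15.0)
    agentic = estimate_agent_run_cost(10, 2_000, 500, 3.0, 15.0)
    print(f"1 call: ${single:.4f} | 10-step agent: ${agentic:.4f} | ratio {agentic / single:.1f}x")
```

With these made-up numbers, the 10-step run costs 15 times as much as a single call, not 10 times, because the history is re-sent on every step.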

4. The Future of Openness

Competition among large language models is intensifying, and with it comes a surge in open-weight models. Alongside these publicly accessible models, distilled versions—trained to mimic larger “teacher” models—are emerging as credible, cost-effective alternatives.

Why It Matters

- Democratizing AI: Open-weight model families like LLaMA and DeepSeek have narrowed the performance gap with proprietary models. This expanded accessibility fosters broader innovation, letting smaller players gain cutting-edge capabilities.

- Distilled Models: Trained on outputs from a larger model, these compact spin-offs can approach teacher-level performance on targeted tasks at a fraction of the size and cost.

- Competitive Landscape: As open models improve, organizations face a clearer trade-off between paying for closed, premium APIs and hosting open models in-house.
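Distillation is commonly trained with a soft-target loss: the student is pushed to match the teacher’s full probability distribution over outputs, softened by a temperature T, rather than only the teacher’s top answer. Below is a minimal sketch of that loss for a single prediction in plain Python; real training computes it over batches inside a deep-learning framework and often mixes it with the ordinary hard-label loss.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: list[float], teacher_logits: list[float], temperature: float = 2.0
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T**2
    so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature**2


if __name__ == "__main__":
    teacher = [4.0, 1.5, 0.5]
    close_student = [3.5, 1.8, 0.4]  # roughly agrees with the teacher
    far_student = [0.5, 1.5, 4.0]    # prefers a different answer
    print(f"close student loss: {distillation_loss(close_student, teacher):.4f}")
    print(f"far student loss:   {distillation_loss(far_student, teacher):.4f}")
```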

The Bigger Picture

Open foundation models empower companies and researchers worldwide to build specialized solutions without huge licensing fees. This explosion in open models not only accelerates AI adoption but also raises questions about responsible use, governance, and the sustainability of massive training runs.
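The trade-off between paying for closed APIs and hosting open models in-house can be framed as a simple break-even volume. This sketch assumes a flat monthly self-hosting bill (GPUs, operations, staff time) that covers the whole volume and a flat per-token API price. Both figures in the example are hypothetical, and a real comparison also has to account for capacity limits, utilization, and differences in model quality.

```python
def monthly_api_cost(tokens_per_month: float, usd_per_m_tokens: float) -> float:
    """Pay-per-use API spend for one month."""
    return tokens_per_month / 1_000_000 * usd_per_m_tokens


def breakeven_tokens_per_month(self_host_usd_per_month: float, usd_per_m_tokens: float) -> float:
    """Monthly token volume above which a flat self-hosting bill beats the API."""
    return self_host_usd_per_month / usd_per_m_tokens * 1_000_000


if __name__ == "__main__":
    # Hypothetical figures: $6,000/month to self-host vs. $2 per million tokens via API.
    volume = breakeven_tokens_per_month(6_000, 2.0)
    print(f"break-even at {volume:,.0f} tokens/month")
    for tokens in (1e9, 3e9, 5e9):
        print(f"{tokens:,.0f} tokens: API ${monthly_api_cost(tokens, 2.0):,.0f} vs self-host $6,000")
```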

5. Capability Overhang

“Capability overhang” describes a scenario in which technology’s potential outstrips its immediate adoption and integration. We’re already seeing this with LLMs: their actual abilities run ahead of how widely they are adopted, held back by industrial and societal constraints such as regulatory hurdles, skills shortages, and legacy system inertia.

Why It Matters

- Missed Opportunities: Industries that fail to integrate LLMs risk being left behind, as new entrants or forward-thinking competitors capitalize on AI-driven efficiencies.

- Democratization vs. Misuse: The barriers to trying AI are dropping (anyone can spin up a prototype), but the same low barriers also give bad actors access to powerful tools.

- Regulatory Gaps: Policymakers and ethicists must catch up to address issues like AI-driven misinformation, data privacy, and algorithmic bias.

The Bigger Picture

As AI’s capacity grows, the conversation shifts from “can we do it?” to “how should we do it responsibly?” The real power of LLMs will come from well-regulated, well-structured integrations that extend beyond flashy demos into meaningful, society-wide improvements.

Shaping the AI-Driven Future

From inference-time compute revolutionizing AI economics to enterprise search finally delivering on its promise, these five trends highlight a pivotal moment in AI’s evolution. Agents will streamline workflows, open-source models will democratize access, and the looming capability overhang challenges everyone—from entrepreneurs to regulators—to adapt responsibly.

As the AI frontier broadens, it’s up to us—innovators, policymakers, and everyday users—to steer its tremendous potential toward positive, inclusive progress. The question is no longer if AI can do something, but rather how we’ll harness its power to create lasting impact.

Get Started

Integrate these insights into your business strategy to make the most of what AI can do. OneSix can help you put emerging AI trends to work and see first-hand the impact they can have on your business.
